The Model Context Protocol (MCP) is an open-standard communications protocol designed to let AI assistants seamlessly connect to external data and tools. In simple terms, MCP acts like a “USB-C port” for AI – a universal interface that all tools and data sources can plug into. By defining a common format for requests and responses, MCP lets developers build reusable connectors between large language models (LLMs) and everything from databases and code repositories to web services and local files.

This matters because modern AI models often hit a wall when it comes to getting real-time context or taking actions outside their own interface. Without MCP, each new integration (say, pulling data from Slack or saving to Google Drive) requires custom engineering. MCP replaces that tangle of one-off code with a single standard. As Anthropic describes, MCP “provides a universal, open standard for connecting AI systems with data sources, replacing fragmented integrations with a single protocol”. In practice, this means agents can query up-to-date information or invoke tools on the fly – making them far more capable and reliable in real-world tasks.

MCP also brings other benefits for AI builders. It comes with pre-built integrations (servers) for common services, so models can “plug in” without custom work. It lets teams switch between different LLM providers, since the protocol is model-agnostic. And it encourages best practices for security and permissions, so that agents only access data they’re allowed to see. In short, MCP moves us from isolated chatbots that can only talk about tasks to context-aware assistants that can securely do things in the real world.

How MCP Works: Hosts, Clients, and Servers

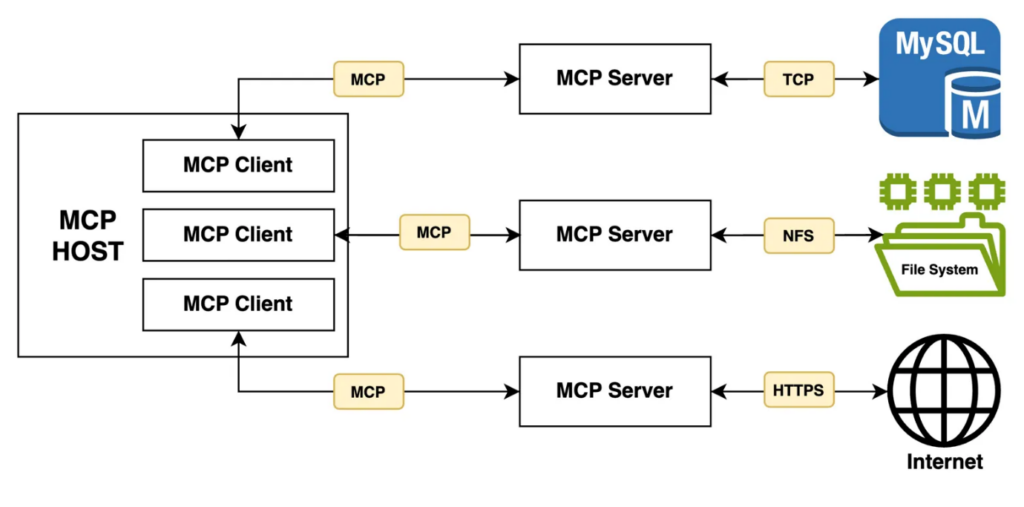

At its core, MCP is a client–server architecture for AI agents. An AI-powered application (the host) can open connections to multiple MCP servers, each of which wraps a particular data source or tool. For example, your host might be a chat interface or an IDE with AI features, and you could have MCP servers for your local file system, a database, or a web API.

- MCP Hosts: These are AI applications or interfaces (like Claude Desktop, IDE plugins, or agent UIs) that want to fetch information or trigger actions. The host initiates connections and user requests.

- MCP Clients: When a host connects to a server, it creates a protocol client instance. Each client maintains a one-to-one session with a specific MCP server, handling the exchange of messages. The client translates the host’s needs into MCP requests and relays the server’s responses back.

- MCP Servers: These are lightweight connector programs, each focused on one capability or tool. An MCP server might expose a file system (so the AI can read your documents), a database, a web search tool, or any other service. It “speaks” MCP on one side and communicates with the actual service (via APIs, SDKs, or local libraries) on the other. By coding one MCP server, a developer makes that service instantly usable by any compliant host.
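The three roles above can be sketched in a few lines of plain Python. This is only a toy model of the relationships (one host, one client per server, one server per capability), not the official MCP SDK; the class names `ToyHost`, `ToyClient`, and `ToyServer` and the two example tools are hypothetical and exist purely for illustration.

```python
from typing import Callable, Dict


class ToyServer:
    """Stands in for an MCP server: wraps one capability behind named tools."""

    def __init__(self, name: str, tools: Dict[str, Callable[..., str]]):
        self.name = name
        self._tools = tools

    def handle(self, tool: str, arguments: dict) -> str:
        # Look the tool up by name and run it with the supplied arguments.
        return self._tools[tool](**arguments)


class ToyClient:
    """Stands in for an MCP client: a one-to-one session with a single server."""

    def __init__(self, server: ToyServer):
        self.server = server

    def call(self, tool: str, **arguments) -> str:
        # Relay the host's request to the server and return the server's answer.
        return self.server.handle(tool, arguments)


class ToyHost:
    """Stands in for the AI application: owns one client per connected server."""

    def __init__(self) -> None:
        self.clients: Dict[str, ToyClient] = {}

    def connect(self, server: ToyServer) -> None:
        self.clients[server.name] = ToyClient(server)

    def use(self, server_name: str, tool: str, **arguments) -> str:
        return self.clients[server_name].call(tool, **arguments)


# Two hypothetical servers, each focused on one capability.
files = ToyServer("files", {"read": lambda path: f"contents of {path}"})
search = ToyServer("search", {"query": lambda q: f"results for {q!r}"})

host = ToyHost()
host.connect(files)
host.connect(search)
```

Calling `host.use("files", "read", path="notes.txt")` goes host → client → server and back, which mirrors the flow described in the list: the host never talks to the underlying service directly.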

Each MCP server advertises what it can do: a set of callable tools (functions), data resources to query, or prompt templates. The host (via its client) can then invoke those tools by name. Because all communication is standardized, the host doesn’t need custom logic for each system – it just issues MCP requests and gets back structured results.
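To make "advertise, then invoke by name" concrete, here is a minimal sketch of a tool registry in plain Python. It does not use the MCP SDK; the `tool` decorator, `list_tools`, `call_tool`, and the two sample tools are assumptions made up for this example, and a real server would describe its tools with richer schemas.

```python
import inspect
from typing import Any, Callable, Dict, List

# Registry mapping tool names to the functions that implement them.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(func: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function as a callable tool under its own name."""
    TOOLS[func.__name__] = func
    return func


@tool
def add(a: int, b: int) -> int:
    """Add two integers."""
    return a + b


@tool
def shout(text: str) -> str:
    """Upper-case a string."""
    return text.upper()


def list_tools() -> List[dict]:
    """What the server advertises: name, description, and parameter names."""
    return [
        {
            "name": name,
            "description": inspect.getdoc(func) or "",
            "parameters": list(inspect.signature(func).parameters),
        }
        for name, func in TOOLS.items()
    ]


def call_tool(name: str, arguments: dict) -> Any:
    """What the host's client does: invoke an advertised tool by name."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**arguments)
```

A host would first call `list_tools()` to discover what is available, then `call_tool("add", {"a": 2, "b": 3})` to run one. Nothing in the host depends on how `add` is implemented, which is the point of the standardized interface.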

Servers can draw on local data (your files, local databases, etc.) or remote services (cloud APIs, SaaS apps). For instance, a “GitHub MCP server” could use GitHub’s API to list issues or create pull requests, while a “Slack MCP server” could post messages to channels. The protocol also accounts for security and consent: the host only gains the level of access the server provides. All of this happens in real time, with messages encoded as JSON-RPC 2.0 and carried over a transport such as standard input/output or HTTP, so the AI agent can chain calls to multiple servers smoothly.
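As a rough illustration of the JSON-RPC exchange mentioned above, the sketch below builds a `tools/call` request and a matching response using only the standard library. The `echo` tool and the helper names `make_request` and `handle` are hypothetical, and the message shapes follow the general JSON-RPC 2.0 pattern as MCP uses it; treat the exact fields as an approximation and check the MCP specification before relying on them.

```python
import json


def make_request(request_id: int, method: str, params: dict) -> str:
    """Serialize a JSON-RPC 2.0 request, as a client would send it."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}
    )


def handle(raw: str) -> str:
    """Toy server side: answers tools/call for a single hypothetical 'echo' tool."""
    msg = json.loads(raw)
    if msg["method"] != "tools/call":
        # Standard JSON-RPC error code for an unsupported method.
        body = {"error": {"code": -32601, "message": "Method not found"}}
    elif msg["params"]["name"] != "echo":
        # Standard JSON-RPC error code for invalid parameters.
        body = {"error": {"code": -32602, "message": "Unknown tool"}}
    else:
        text = msg["params"]["arguments"]["text"]
        body = {"result": {"content": [{"type": "text", "text": text}], "isError": False}}
    # The response echoes the request id so the client can match them up.
    return json.dumps({"jsonrpc": "2.0", "id": msg["id"], **body})


request = make_request(1, "tools/call", {"name": "echo", "arguments": {"text": "hello"}})
response = json.loads(handle(request))
```

Because every server answers in the same envelope (`jsonrpc`, `id`, and either `result` or `error`), a host can chain calls across several servers with one piece of parsing logic.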

In summary, MCP’s architecture lets developers reuse integrations. Instead of writing a new connection for every AI feature, you write one MCP server per tool. Any MCP-aware AI app (host) can then use that server’s capabilities. This decoupling is the core idea: hosts talk to the protocol, and servers talk through the protocol, making the system plug-and-play.
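The payoff of this decoupling is easy to see with a little arithmetic. The sketch below compares the number of integrations needed with and without a shared protocol; the function names and the example figures (4 hosts, 10 tools) are illustrative assumptions, not measurements.

```python
def bespoke_integrations(hosts: int, tools: int) -> int:
    """Without a shared protocol, every host needs its own connector to every tool."""
    return hosts * tools


def protocol_integrations(hosts: int, tools: int) -> int:
    """With a shared protocol, each host and each tool implements it once."""
    return hosts + tools


# Hypothetical example: 4 AI applications and 10 tools.
without_mcp = bespoke_integrations(4, 10)   # 40 custom connectors
with_mcp = protocol_integrations(4, 10)     # 14 protocol implementations
```

The gap widens as either side grows: adding an eleventh tool costs one new server under the protocol, versus four new connectors in the bespoke case.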

Real-World Adoption: Who’s Using MCP

Already, a range of companies and projects are experimenting with MCP. Anthropic notes that Block (financial services) and Apollo (GraphQL data platform) have integrated MCP into their systems, and developer tool firms like Zed, Replit, Codeium, and Sourcegraph are building MCP support to enhance AI workflows. For example, Replit enables AI agents to use MCP so they can read and write code across a user’s files and projects. Apollo uses MCP to let AI agents query structured data (like databases or APIs) directly. Sourcegraph and Codeium plug MCP into their coding tools to retrieve context and suggest smarter code fixes.

Even Microsoft is on board: its Copilot Studio now supports MCP connectors. This means Copilot users can add pre-built MCP “actions” (like a wrapper around an internal API or a CRM) without writing custom code. In other words, business users can point Copilot Studio at an MCP server and immediately get new AI features, all managed behind Microsoft’s enterprise security controls.

Other examples of MCP in action include AI chatbots automatically retrieving and summarizing documents from Google Drive or company wikis, bots posting updates into Slack channels, or coding assistants that open your Git repos, run tests, and commit code – all through MCP servers. The key point is that any AI agent built to use MCP can tap into any MCP server. This interoperability is what makes MCP powerful.

The Future of AI Connectivity with MCP

MCP has quickly emerged as the universal “USB-C port” for AI, providing a standardized way for LLMs and agents to connect with data and tools. By replacing dozens of ad-hoc connectors with one open protocol, MCP lets any AI client plug into new data sources with minimal extra work. This unified approach dramatically simplifies integration (solving the classic many-to-many problem) and yields better results – agents can tap live context to produce richer, more relevant responses. An open ecosystem is already taking shape: the official MCP repos include SDKs and registries of servers, and early adopters like Block, Apollo, and leading developer platforms are integrating MCP into their products.

MCP therefore acts as a crucial bridge between sophisticated LLMs and real-world systems, helping agents break out of data silos. As Block’s CTO, Dhanji R. Prasanna, puts it, open technologies like MCP “connect AI to real-world applications” and remove the drudgery of routine work so people can “focus on the creative”. Looking ahead, we can imagine AI assistants that seamlessly pull in data from documents, databases, and APIs to automate routine tasks, freeing us to innovate. In short, by linking models to the world, MCP paves the way for the next generation of AI agents – not just smart, but genuinely useful in everyday life.

Conclusion

Building an LLM-powered application to chat with documents offers a revolutionary way to interact with and extract information from large volumes of text, particularly within PDF files. By leveraging tools like LangChain, FAISS, and advanced language models from OpenAI or Hugging Face, this solution provides a powerful, user-friendly interface for querying document content.

This approach not only streamlines information retrieval but also enhances the accuracy and efficiency of data extraction processes. Whether in business, research, or other fields, the ability to quickly access and converse with document content gives users a significant edge, allowing them to make informed decisions faster. As AI-driven technologies continue to evolve, applications like this will become indispensable tools for navigating the ever-growing sea of digital information.